This group is dedicated to the experimental AI Visualizer for Archicad 27, which will reach End of Service on December 31, 2024.

The new AI Visualizer in Archicad 28 is now fully cloud-based, with advanced controls like creativity and upscaling.

Best prompts and settings

2023-11-17 01:28 PM

Do you have the "perfect prompt", or have you found a wonderful balance of settings that works for you and that you want to share with others? Don't keep your best prompts and settings to yourself: share them with the community and check what others are discussing.

GRAPHISOFT Senior Product Manager

2024-02-12 08:54 AM

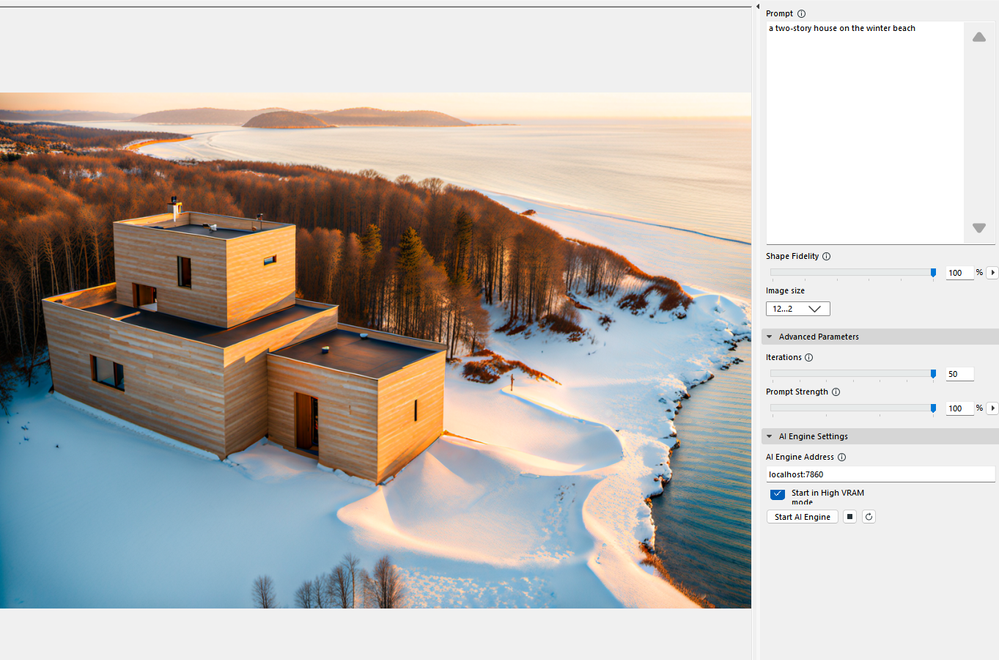

Nice enough image, but I think it ignored just about every one of your prompts?

Barry.

Versions 6.5 to 27

i7-10700 @ 2.9 GHz, 32GB RAM, GeForce RTX 2060 (6GB), Windows 10

Lenovo Thinkpad - i7-1270P 2.20 GHz, 32GB RAM, Nvidia T550, Windows 11

2024-02-12 08:57 AM

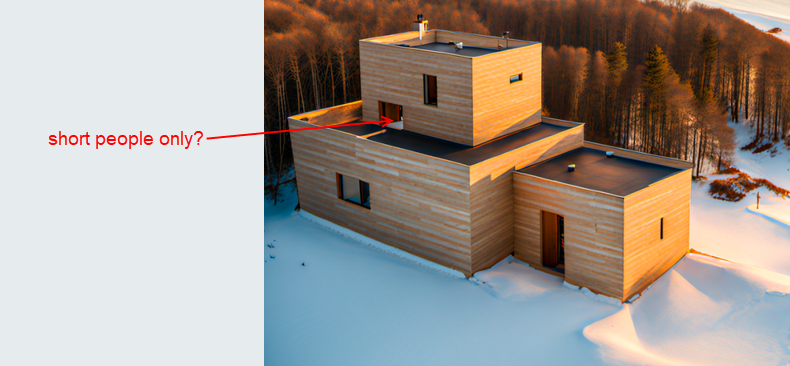

And now it has added a third floor and created a terrace that you can't access?

Barry.

2024-02-12 09:00 AM

You don't know what kind of image it would have produced without the prompt.

The prompt is applied to some extent.

2024-02-12 09:02 AM

2024-02-12 03:06 PM

Hey Scoz! Very nice results, thank you for posting!

2024-02-13 03:13 AM - edited 2024-02-13 03:14 AM

@Jeno Barta wrote:

Hey Scoz! Very nice results, thank you for posting!

Seriously?

You think that is good?

The quality of the image might be OK, but the content is rubbish.

This AI just seems to do what it wants without any real guidance from the user.

The background looks great, but the interpretation of the models is atrocious.

I am not having a go at Scoz's images; it is all down to the AI, which at the moment just doesn't work, in my opinion.

I really hope it improves greatly.

Barry.

2024-02-13 09:12 AM - edited 2024-02-13 09:14 AM

Hey Barry Kelly!

Thanks, I do think you have good points here, but you have to approach this technology a bit differently (for now at least). Almost everybody on the market who provides generative-AI-based image generation services for buildings (in the AEC sector) markets the solution as "rendering". However, we market this solution as what it is capable of right now: inspiration, as AI is not capable of rendering right now.

Generative image models have no idea what they are generating. They have no context about your goals or your motivations, basically what you want. To make things worse, the generator is only one part of the equation. The other part is the language model that is trained to understand our natural language, which also has no context or understanding, but only tries to translate our words into numbers that can recall a certain pattern from the generative model. And then there is the last part: us, and how well we can vocalise our goals and needs. Even when you talk to another person, who is far more intelligent than a generative AI and far more capable of understanding you, that person sometimes cannot understand what you want, because we are not 100% accurate in vocalising our thoughts.

When it comes to AI it's even worse, and this domain is called "prompt engineering": figuring out how a certain AI best understands us.

Because of that, AI Visualizer (Stable Diffusion and its custom components) won't create perfect results. It is going to create something that tries to resemble your needs. Sometimes it's good (based on a "lucky seed", your prompting, etc.) and sometimes it's bad. But right now the goal is not perfect results. The goal is results good enough to inspire you.

Sure, you could focus on the mistakes, like small doors, gaps, and inconsistencies such as a platform with no access, but you could also focus on what you can get out of an AI-generated idea that inspires you. What about the surfaces, the whole "vibe" of the generated scene, the additional parts that were generated onto the building? If the generated image is not 100% precise and does not fully make sense, can you really not take any inspiration or ideas from it?

So yes! Based on what I said above, I do think they are really good results. They do not make sense architecturally in a lot of places and they have artifacts, but they also have a lot of inspiring parts that can give you ideas and concepts to think about, and can give a general impression of how an idea would roughly look visually.

However, AI technology is developing like no other. Soon we are going to have fewer artifacts in the images, much faster generation times (generative models will generate in real time, just as we moved from static rendering to real-time rendering), and models that understand us more precisely. We are going to have the opportunity to fine-tune generative models to have more context, a better understanding of architecture, etc.

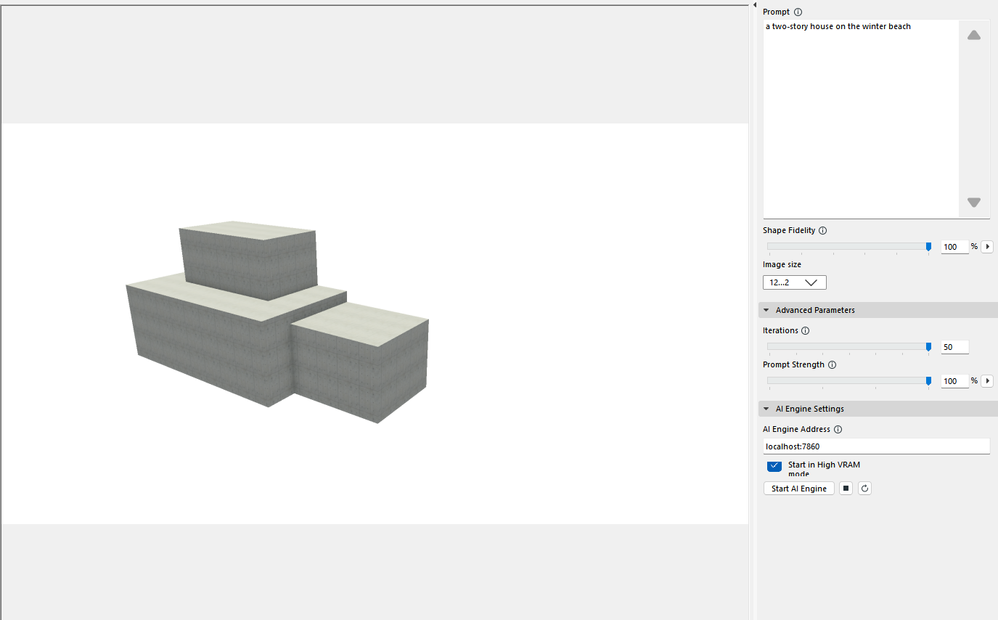

So we are going to have better and better results in the future, but right now we have this, which I really do think is amazing. We can generate building concepts, not 100% perfect but I think good enough, from 2-3 grey blocks, which can give us ideas and inspiration for further design in about 30-60 seconds (or 5 seconds on very fast machines...).

2024-02-13 10:39 AM

@Jeno Barta wrote: However, AI technology is developing like no other. Soon we are going to have fewer artifacts in the images, much faster generation times (generative models will generate in real time, just as we moved from static rendering to real-time rendering), and models that understand us more precisely.

But it is still going to be a pain to model and work with it in AC, to make architectural sense of it, because every fundamental CAD/BIM functionality has been neglected while GS experiments with things like this. You are right that it is a bit misguided to question the current result, as it is what it is, but what can and should be questioned is why GS is putting the AI Visualizer front and center. Hopefully it's just opportunistic marketing and nothing that will affect any long-term strategy, but it's obviously taking up resources that surely could have been put to better use.

The bottom line is that the majority of users don't rely on AC for divine inspiration but rather for it to facilitate and support a design process that takes place in an increasingly complex and competitive environment, an environment which leaves no margin for workflows that aren't leveraged by computational power. But while GS chases down new customers with AI Visualizer and Apple Vision Pro compatibility, current users still have to get by every day without proper groups/systems, instances, types, dynamic arrays and modern visibility control, resulting in unnecessary manual work.

The lack of basic functionality also puts a limit on the prospect of useful AI integration in AC - or should the AI be expected to fiddle around with favorites, multiply and layer combinations as needed? Yet there is one obvious first AI step to take that would have a real impact on everyday AC workflows, and that is natural-language-prompted criteria expressions - but I guess there wasn't a Stable Diffusion strap on that boot.

2024-02-13 10:59 AM

I also see this AI renderer as a first preview of future possibilities.

As ArchiCAD users, we are of course used to more precision and possibilities for intervention, but that's why it's interesting to see what this technology can produce from the model with just a little information.

I therefore carried out tests with uninformed people and showed them the rendering results without any further explanation. This has shown me that it will be difficult for the profession of architect in the future.

You could now create a long wish list of how the renderer should work. But it is more important to see what the future will look like:

- Voice recognition of the user (i.e. talking to the system)

- Inclusion of the actual real environment (e.g. using the Apple Vision Pro to tell the renderer to use the current view of the Vision Pro as a background)

- Very good support in formulating the requirements for the renderer

- Results with various problem detections (e.g. doors too low) and possible solutions

- Creation of the correct plans and drawings for the rendered results.

When this technology has matured that far, the question remains: why do we still need planners?

2024-02-13 11:20 AM

Well, if we completely ignore the aspect of human experience and the actual building of the house then sure - what's the point of the architect? But I rest assured that there is meaning to the profession for the foreseeable future (even considering unimaginable advancements of AI). I'm more concerned about the fact that we are hamstrung by applications that don't deliver on the potential of CAD/BIM but rather force us to jump through hoops to achieve the most basic results.