This group is dedicated to the experimental AI Visualizer for Archicad 27, which will reach End of Service on December 31, 2024.

The new AI Visualizer in Archicad 28 is now fully cloud-based, with advanced controls like creativity and upscaling. Click here for further details.

Problems installing the AI Engine with an AMD Radeon RX 6700 XT

2023-12-04 07:41 AM - edited 2023-12-04 07:42 AM

When I want to start the AI engine it says:

Python 3.10.6 (tags/v3.10.6:9c7b4bd, Aug 1 2022, 21:53:49) [MSC v.1932 64 bit (AMD64)]

Version: v1.6.0

Commit hash: 5ef669de080814067961f28357256e8fe27544f4

Launching Web UI with arguments: --api --listen --api-server-stop --no-half-vae --skip-torch-cuda-test

no module 'xformers'. Processing without...

no module 'xformers'. Processing without...

No module 'xformers'. Proceeding without it.

Warning: caught exception 'Found no NVIDIA driver on your system. Please check that you have an NVIDIA GPU and installed a driver from http://www.NVIDIA.com/Download/index.aspx', memory monitor disabled

2023-12-04 07:34:30,355 - ControlNet - INFO - ControlNet v1.1.410

ControlNet preprocessor location: C:\sd.webui\webui\extensions\sd-webui-controlnet\annotator\downloads

2023-12-04 07:34:30,476 - ControlNet - INFO - ControlNet v1.1.410

Loading weights [31e35c80fc] from C:\sd.webui\webui\models\Stable-diffusion\sd_xl_base_1.0.safetensors

ERROR: [Errno 10048] error while attempting to bind on address ('0.0.0.0', 7860): only one usage of each socket address (protocol/network address/port) is normally permitted

Creating model from config: C:\sd.webui\webui\repositories\generative-models\configs\inference\sd_xl_base.yaml

Running on local URL: http://0.0.0.0:7861

Applying attention optimization: InvokeAI... done.

loading stable diffusion model: RuntimeError

Traceback (most recent call last):

File "threading.py", line 973, in _bootstrap

File "threading.py", line 1016, in _bootstrap_inner

File "threading.py", line 953, in run

File "C:\sd.webui\webui\modules\initialize.py", line 147, in load_model

shared.sd_model # noqa: B018

File "C:\sd.webui\webui\modules\shared_items.py", line 110, in sd_model

return modules.sd_models.model_data.get_sd_model()

File "C:\sd.webui\webui\modules\sd_models.py", line 499, in get_sd_model

load_model()

File "C:\sd.webui\webui\modules\sd_models.py", line 649, in load_model

sd_model.cond_stage_model_empty_prompt = get_empty_cond(sd_model)

File "C:\sd.webui\webui\modules\sd_models.py", line 534, in get_empty_cond

d = sd_model.get_learned_conditioning([""])

File "C:\sd.webui\webui\modules\sd_models_xl.py", line 31, in get_learned_conditioning

c = self.conditioner(sdxl_conds, force_zero_embeddings=['txt'] if force_zero_negative_prompt else [])

File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\sd.webui\webui\repositories\generative-models\sgm\modules\encoders\modules.py", line 141, in forward

emb_out = embedder(batch[embedder.input_key])

File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\sd.webui\webui\modules\sd_hijack_clip.py", line 234, in forward

z = self.process_tokens(tokens, multipliers)

File "C:\sd.webui\webui\modules\sd_hijack_clip.py", line 273, in process_tokens

z = self.encode_with_transformers(tokens)

File "C:\sd.webui\webui\modules\sd_hijack_clip.py", line 349, in encode_with_transformers

outputs = self.wrapped.transformer(input_ids=tokens, output_hidden_states=self.wrapped.layer == "hidden")

File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\sd.webui\system\python\lib\site-packages\transformers\models\clip\modeling_clip.py", line 822, in forward

return self.text_model(

File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\sd.webui\system\python\lib\site-packages\transformers\models\clip\modeling_clip.py", line 740, in forward

encoder_outputs = self.encoder(

File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\sd.webui\system\python\lib\site-packages\transformers\models\clip\modeling_clip.py", line 654, in forward

layer_outputs = encoder_layer(

File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\sd.webui\system\python\lib\site-packages\transformers\models\clip\modeling_clip.py", line 382, in forward

hidden_states = self.layer_norm1(hidden_states)

File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\sd.webui\webui\extensions-builtin\Lora\networks.py", line 474, in network_LayerNorm_forward

return originals.LayerNorm_forward(self, input)

File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\normalization.py", line 190, in forward

return F.layer_norm(

File "C:\sd.webui\system\python\lib\site-packages\torch\nn\functional.py", line 2515, in layer_norm

return torch.layer_norm(input, normalized_shape, weight, bias, eps, torch.backends.cudnn.enabled)

RuntimeError: "LayerNormKernelImpl" not implemented for 'Half'

Stable diffusion model failed to load

Exception in thread Thread-18 (load_model):

Traceback (most recent call last):

File "threading.py", line 1016, in _bootstrap_inner

File "threading.py", line 953, in run

File "C:\sd.webui\webui\modules\initialize.py", line 153, in load_model

devices.first_time_calculation()

File "C:\sd.webui\webui\modules\devices.py", line 148, in first_time_calculation

linear(x)

File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl

return forward_call(*args, **kwargs)

File "C:\sd.webui\webui\extensions-builtin\Lora\networks.py", line 429, in network_Linear_forward

return originals.Linear_forward(self, input)

File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\linear.py", line 114, in forward

return F.linear(input, self.weight, self.bias)

RuntimeError: "addmm_impl_cpu_" not implemented for 'Half'

To create a public link, set `share=True` in `launch()`.

Startup time: 21.9s (prepare environment: 0.6s, import torch: 5.2s, import gradio: 1.5s, setup paths: 1.3s, initialize shared: 0.5s, other imports: 1.0s, setup codeformer: 0.2s, load scripts: 1.6s, create ui: 0.6s, gradio launch: 9.3s, add APIs: 0.1s).

As I see it, it wants an NVIDIA GPU.

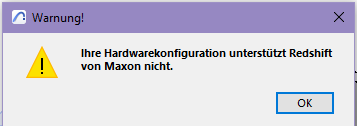

Just a note: Redshift also doesn't work.

Has anyone had a similar problem?
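For readers skimming a log like the one above, the following is a minimal sketch of how its key messages could be matched to plain-language hints. The `KNOWN_SIGNATURES` table and the `diagnose` helper are hypothetical names introduced for illustration, and the hints are a reading of the messages, not official Graphisoft guidance.

```python
# Hypothetical helper: maps substrings seen in the log above to a short hint.
# The hint texts are interpretations of the messages, not vendor documentation.
KNOWN_SIGNATURES = {
    "Found no NVIDIA driver": (
        "PyTorch found no NVIDIA driver, so no CUDA device is available."
    ),
    "not implemented for 'Half'": (
        "A half-precision (fp16) operation ran on the CPU, "
        "where this PyTorch build does not implement it."
    ),
    "[Errno 10048]": (
        "The requested port was already in use, "
        "possibly by another running instance of the engine."
    ),
}


def diagnose(log_text: str) -> list[str]:
    """Return one hint for each known signature that appears in the log text."""
    return [hint for needle, hint in KNOWN_SIGNATURES.items() if needle in log_text]


if __name__ == "__main__":
    # Two lines copied from the log in this post.
    sample = "\n".join(
        [
            "Warning: caught exception 'Found no NVIDIA driver on your system.'",
            "RuntimeError: \"LayerNormKernelImpl\" not implemented for 'Half'",
        ]
    )
    for hint in diagnose(sample):
        print("-", hint)
```

Read this way, the two `RuntimeError` lines look like a consequence of the missing NVIDIA driver rather than a separate problem: without a CUDA device, the model is loaded on the CPU.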

Labels: installation, settings, Windows

2023-12-04 01:19 PM - edited 2023-12-04 01:19 PM

Hi Planconsort,

Thanks for the question.

On Windows, the AI Visualizer Add-on is compatible with NVIDIA GPUs only.

For Redshift, we recommend an NVIDIA GPU with CUDA compute capability 7.0 or higher and 10 GB of VRAM or more (an NVIDIA Quadro, Titan, or GeForce RTX GPU for hardware-accelerated ray tracing).

Kind regards,

Technical Support Engineer
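As an illustration of the Redshift recommendation above, here is a minimal sketch that compares a GPU's specifications with the two stated thresholds (compute capability 7.0 or higher, 10 GB of VRAM or more). The `Gpu` class, the helper names, and the example cards are hypothetical; look up the real values for your card in NVIDIA's specifications.

```python
from dataclasses import dataclass

# Thresholds taken from the reply above.
MIN_COMPUTE_CAPABILITY = (7, 0)
MIN_VRAM_GB = 10.0


@dataclass
class Gpu:
    """Hypothetical record; fill in the values from the vendor's spec sheet."""
    name: str
    compute_capability: str  # written as "major.minor", e.g. "7.5"
    vram_gb: float


def parse_capability(text: str) -> tuple[int, int]:
    """Turn "7.5" into (7, 5) so versions compare as integers, not floats."""
    major, _, minor = text.strip().partition(".")
    return int(major), int(minor or 0)


def meets_redshift_recommendation(gpu: Gpu) -> bool:
    """True if both the compute capability and the VRAM reach the thresholds."""
    capable = parse_capability(gpu.compute_capability) >= MIN_COMPUTE_CAPABILITY
    return capable and gpu.vram_gb >= MIN_VRAM_GB


if __name__ == "__main__":
    # Made-up example cards, for illustration only.
    for gpu in (Gpu("Example card A", "8.6", 12.0), Gpu("Example card B", "3.5", 8.0)):
        verdict = "meets" if meets_redshift_recommendation(gpu) else "does not meet"
        print(f"{gpu.name} {verdict} the recommendation")
```

Comparing `(major, minor)` tuples avoids treating a version string as a decimal number.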

2023-12-05 07:59 AM

So, does this mean I can't use either of them with this hardware at all? Is there a way it might work with AMD GPUs in the future, or is there a workaround now?

2023-12-05 12:00 PM

Hi,

AMD GPU support is not planned yet. In the meantime, you can install the Add-on on a device that meets the requirements (as a server) and use the Add-on on your device (as a client). For more info, please check this post.

Kind regards,

Technical Support Engineer
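For the server/client setup described above, a quick first check is whether the client machine can reach the engine over the network at all. The sketch below only tests that a TCP connection can be opened. It assumes the engine listens on port 7860, the port the log in this thread tried first; the `engine_reachable` helper and any hostname passed to it are hypothetical, and the actual Add-on configuration is described in the linked post, not here.

```python
import socket


def engine_reachable(host: str, port: int = 7860, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened within timeout.

    The default port 7860 is an assumption based on the log in this thread.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False
```

For example, `engine_reachable("192.168.1.50")` (a made-up address) would return `False` if the server machine is off, the engine is not running, or a firewall blocks the port. A `True` result only shows that something is listening there, not that it is the AI Engine.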

2024-02-01 11:23 AM

Hi, I'm currently using a Quadro K5200 desktop GPU, which has a CUDA compute capability of 3.5. Is this the reason my AI Engine doesn't start, or can I use this GPU and should I look for some other problem?