This group is dedicated to the experimental AI Visualizer for Archicad 27, which will reach End of Service on December 31, 2024.

The new AI Visualizer in Archicad 28 is now fully cloud-based, with advanced controls like creativity and upscaling. Click here for further details.

AI Visualizer Technical questions

2023-11-17 01:25 PM - last edited on 2023-11-24 05:38 PM by Karl Ottenstein

We know that taking part in an experiment can be challenging from time to time. Do you have issues with installing or running the Archicad AI Visualizer Add-on? This is your place to ask your questions and initiate discussion with others about technical difficulties you may experience while trying to install or run the experimental add-on, or generate images.

GRAPHISOFT Senior Product Manager

Labels: installation, macOS, settings, Tips and tricks, Windows

2023-12-06 12:49 PM

Hi Raygab,

Thanks for the question.

Did you set the computer name correctly? What do you mean by 'webinterface'?

Please contact your local support and we will investigate your issue.

Kind regards,

Technical Support Engineer

2023-12-09 09:31 PM - last edited on 2023-12-17 02:04 AM by Laszlo Nagy

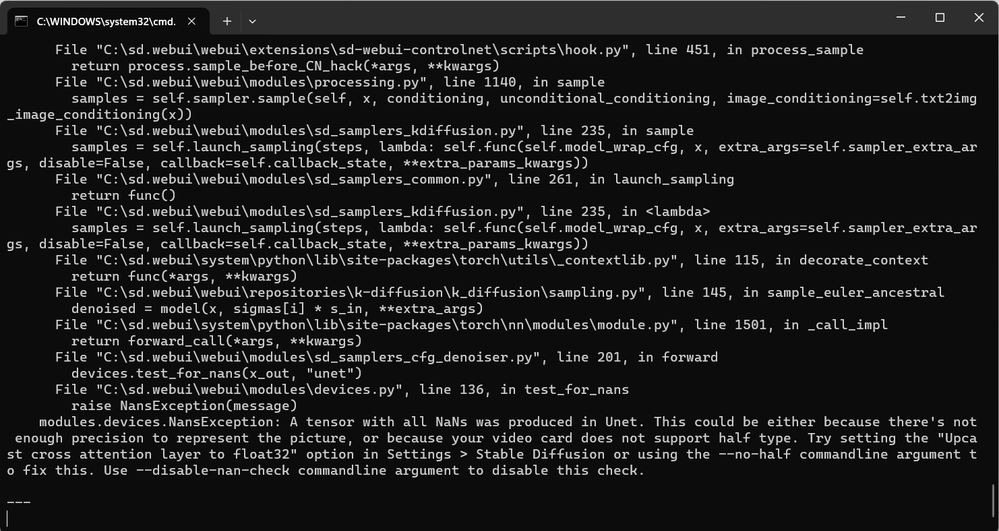

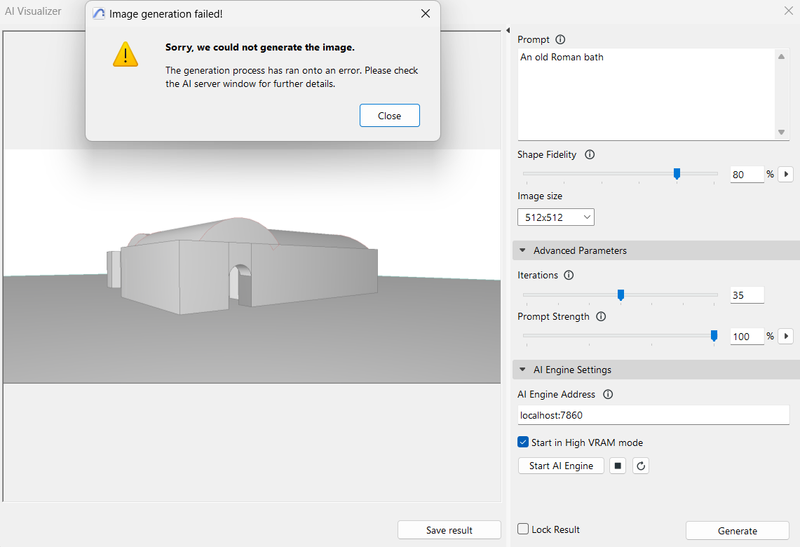

I am using the Turkish student version of Archicad 27 on Windows.

I followed the install instructions and the installation succeeded. I can open the engine, but when I press the Generate button I keep getting these errors.

I searched the forum for a solution but couldn't find one. Can anyone help?

16GB RAM

NVIDIA GTX1650 12GB (4GB VRAM)

2023-12-11 11:06 AM

Hi,

Thanks for the screenshots. Please note that the minimum requirement to run the Add-on is 8 GB of VRAM on Windows.

Kind regards,

Technical Support Engineer
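For readers who want to check their own card against the 8 GB figure quoted above, here is a minimal sketch in Python. It assumes an NVIDIA GPU with the `nvidia-smi` command-line tool on the PATH. The function names and the threshold constant are illustrative and are not part of the Add-on.

```python
import subprocess

# 8 GB minimum quoted in this thread for Windows, expressed in MiB.
MIN_VRAM_MIB = 8 * 1024


def parse_vram_mib(nvidia_smi_output: str) -> list[int]:
    """Parse one integer (MiB) per GPU from nvidia-smi's CSV output."""
    return [int(line.strip()) for line in nvidia_smi_output.splitlines() if line.strip()]


def query_vram_mib() -> list[int]:
    """Ask nvidia-smi for the total memory of each GPU, in MiB."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_vram_mib(result.stdout)


def meets_minimum(vram_mib: list[int], minimum: int = MIN_VRAM_MIB) -> bool:
    """True if at least one GPU reports the minimum amount of memory."""
    return any(v >= minimum for v in vram_mib)
```

Calling `meets_minimum(query_vram_mib())` on the machine in question gives a quick yes/no answer. The total reported by the driver can differ slightly from the marketed figure, so a borderline result is worth checking by hand.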

2023-12-18 08:24 AM - last edited on 2024-01-01 04:18 PM by Laszlo Nagy

I have downloaded the Visualizer on my PC.

I found that when I log in to the local administrator account, or run it as administrator, everything works normally.

However, when I log in to the end-user account, I cannot run the AI Engine.

Could you help with this?

PS: NVIDIA GeForce RTX 3060 (12GB RAM)

Archicad 27 (4030 INT)

2023-12-18 08:53 AM

Hi, it turned out that the install was corrupted by something.

After redownloading, unpacking within the Downloads directory, and moving the folder from there to the root path, everything worked, even without administrator rights.
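A small sketch of how the "root path" condition could be checked in Python. The example path `C:\sd.webui` is taken from the log posted elsewhere in this thread. The helper name is hypothetical, and the check only looks at the path string, not at whether the folder exists or is intact.

```python
from pathlib import PureWindowsPath


def is_directly_under_drive_root(folder: str) -> bool:
    """True if `folder` sits directly under a drive root, e.g. C:\\sd.webui."""
    path = PureWindowsPath(folder)
    # A drive letter is required, the parent must be the drive root itself,
    # and the path must not be the root (whose parent is itself).
    return bool(path.drive) and path.parent == PureWindowsPath(path.anchor) and path != path.parent


print(is_directly_under_drive_root(r"C:\sd.webui"))
print(is_directly_under_drive_root(r"C:\Users\me\Downloads\sd.webui"))
```

`PureWindowsPath` is used so the check behaves the same on any operating system.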

By web interface I meant the web interface of the engine. Simply put, I tried to connect with the browser and not from within Archicad.

Kind regards,

Raygab
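Connecting to the engine from outside Archicad, as described above, can also be scripted. The sketch below assumes the engine listens on `http://localhost:7860`, which is the address that appears in a log posted elsewhere in this thread. Your port may differ, and the function name is illustrative.

```python
import urllib.error
import urllib.request


def engine_is_reachable(url: str = "http://localhost:7860", timeout: float = 3.0) -> bool:
    """Return True if an HTTP GET to `url` succeeds, False on any connection error."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (urllib.error.URLError, OSError):
        # Covers refused connections, timeouts, and HTTP error responses.
        return False
```

A `False` result only says that nothing answered at that address. It does not tell you whether the engine failed to start or is listening somewhere else.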

2023-12-22 11:52 PM

I also have the same problem. Have you found a solution?

2023-12-28 10:43 PM - last edited on 2024-01-01 04:19 PM by Laszlo Nagy

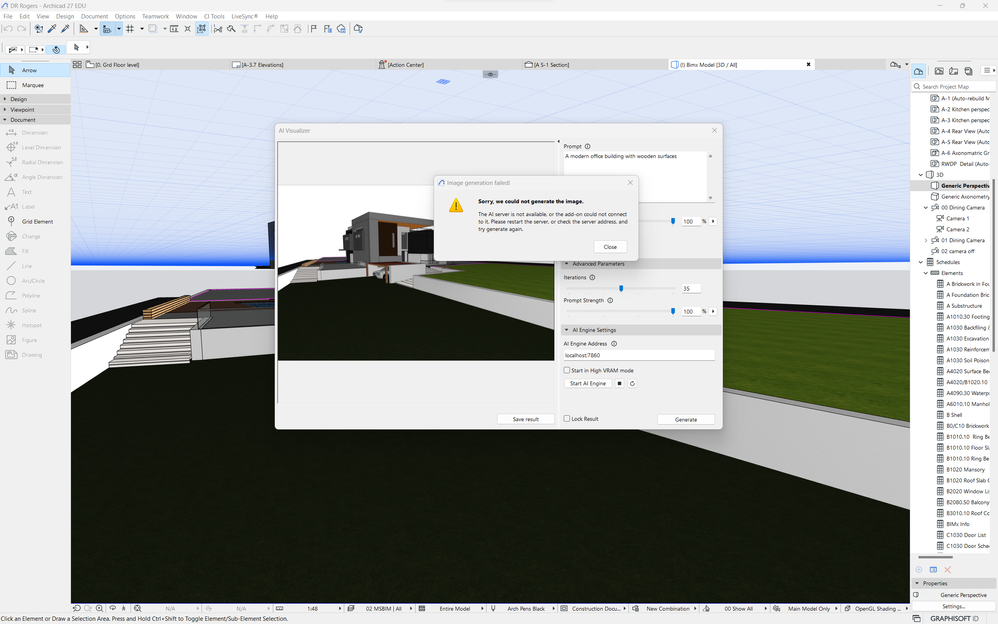

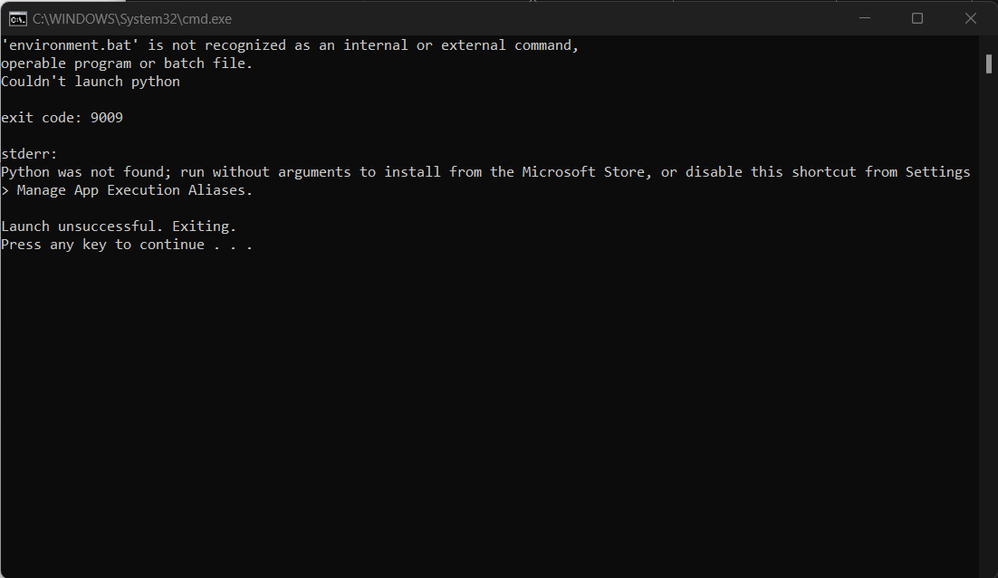

Hi!

I have an issue:

Python 3.10.6 (tags/v3.10.6:9c7b4bd, Aug 1 2022, 21:53:49) [MSC v.1932 64 bit (AMD64)]
Version: v1.6.0
Commit hash: 5ef669de080814067961f28357256e8fe27544f4
Launching Web UI with arguments: --medvram --api --listen --api-server-stop --no-half-vae
no module 'xformers'. Processing without...
no module 'xformers'. Processing without...
No module 'xformers'. Proceeding without it.
2023-12-28 22:31:46,137 - ControlNet - INFO - ControlNet v1.1.410
ControlNet preprocessor location: C:\sd.webui\webui\extensions\sd-webui-controlnet\annotator\downloads
2023-12-28 22:31:46,231 - ControlNet - INFO - ControlNet v1.1.410
Loading weights [31e35c80fc] from C:\sd.webui\webui\models\Stable-diffusion\sd_xl_base_1.0.safetensors
Running on local URL: http://0.0.0.0:7860
Creating model from config: C:\sd.webui\webui\repositories\generative-models\configs\inference\sd_xl_base.yaml
To create a public link, set `share=True` in `launch()`.
Startup time: 13.9s (prepare environment: 2.5s, import torch: 2.8s, import gradio: 0.8s, setup paths: 0.8s, initialize shared: 0.2s, other imports: 0.6s, load scripts: 1.2s, create ui: 0.4s, gradio launch: 4.3s).
Applying attention optimization: Doggettx... done.
Model loaded in 10.1s (load weights from disk: 1.2s, create model: 1.6s, apply weights to model: 3.1s, calculate empty prompt: 4.0s).
2023-12-28 22:32:28,380 - ControlNet - INFO - Loading model: diffusion_pytorch_model [a2e6a438]
2023-12-28 22:32:28,611 - ControlNet - INFO - Loaded state_dict from [C:\sd.webui\webui\extensions\sd-webui-controlnet\models\diffusion_pytorch_model.safetensors]
2023-12-28 22:32:28,621 - ControlNet - INFO - controlnet_sdxl_config
2023-12-28 22:32:41,352 - ControlNet - INFO - ControlNet model diffusion_pytorch_model [a2e6a438] loaded.
2023-12-28 22:32:42,435 - ControlNet - INFO - Loading preprocessor: canny
2023-12-28 22:32:42,435 - ControlNet - INFO - preprocessor resolution = 600
2023-12-28 22:32:42,555 - ControlNet - INFO - ControlNet Hooked - Time = 14.34455680847168
0%| | 0/35 [00:02<?, ?it/s]
*** API error: POST: http://localhost:7860/sdapi/v1/txt2img {'error': 'OutOfMemoryError', 'detail': '', 'body': '', 'errors': 'CUDA out of memory. Tried to allocate 20.00 MiB (GPU 0; 4.00 GiB total capacity; 3.30 GiB already allocated; 0 bytes free; 3.47 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF'}
Traceback (most recent call last):
  File "C:\sd.webui\system\python\lib\site-packages\anyio\streams\memory.py", line 98, in receive
    return self.receive_nowait()
  File "C:\sd.webui\system\python\lib\site-packages\anyio\streams\memory.py", line 93, in receive_nowait
    raise WouldBlock
anyio.WouldBlock

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "C:\sd.webui\system\python\lib\site-packages\starlette\middleware\base.py", line 78, in call_next
    message = await recv_stream.receive()
  File "C:\sd.webui\system\python\lib\site-packages\anyio\streams\memory.py", line 118, in receive
    raise EndOfStream
anyio.EndOfStream

During handling of the above exception, another exception occurred:

Traceback (most recent call last):
  File "C:\sd.webui\webui\modules\api\api.py", line 187, in exception_handling
    return await call_next(request)
  File "C:\sd.webui\system\python\lib\site-packages\starlette\middleware\base.py", line 84, in call_next
    raise app_exc
  File "C:\sd.webui\system\python\lib\site-packages\starlette\middleware\base.py", line 70, in coro
    await self.app(scope, receive_or_disconnect, send_no_error)
  File "C:\sd.webui\system\python\lib\site-packages\starlette\middleware\base.py", line 108, in __call__
    response = await self.dispatch_func(request, call_next)
  File "C:\sd.webui\webui\modules\api\api.py", line 151, in log_and_time
    res: Response = await call_next(req)
  File "C:\sd.webui\system\python\lib\site-packages\starlette\middleware\base.py", line 84, in call_next
    raise app_exc
  File "C:\sd.webui\system\python\lib\site-packages\starlette\middleware\base.py", line 70, in coro
    await self.app(scope, receive_or_disconnect, send_no_error)
  File "C:\sd.webui\system\python\lib\site-packages\starlette\middleware\cors.py", line 84, in __call__
    await self.app(scope, receive, send)
  File "C:\sd.webui\system\python\lib\site-packages\starlette\middleware\gzip.py", line 26, in __call__
    await self.app(scope, receive, send)
  File "C:\sd.webui\system\python\lib\site-packages\starlette\middleware\exceptions.py", line 79, in __call__
    raise exc
  File "C:\sd.webui\system\python\lib\site-packages\starlette\middleware\exceptions.py", line 68, in __call__
    await self.app(scope, receive, sender)
  File "C:\sd.webui\system\python\lib\site-packages\fastapi\middleware\asyncexitstack.py", line 21, in __call__
    raise e
  File "C:\sd.webui\system\python\lib\site-packages\fastapi\middleware\asyncexitstack.py", line 18, in __call__
    await self.app(scope, receive, send)
  File "C:\sd.webui\system\python\lib\site-packages\starlette\routing.py", line 718, in __call__
    await route.handle(scope, receive, send)
  File "C:\sd.webui\system\python\lib\site-packages\starlette\routing.py", line 276, in handle
    await self.app(scope, receive, send)
  File "C:\sd.webui\system\python\lib\site-packages\starlette\routing.py", line 66, in app
    response = await func(request)
  File "C:\sd.webui\system\python\lib\site-packages\fastapi\routing.py", line 237, in app
    raw_response = await run_endpoint_function(
  File "C:\sd.webui\system\python\lib\site-packages\fastapi\routing.py", line 165, in run_endpoint_function
    return await run_in_threadpool(dependant.call, **values)
  File "C:\sd.webui\system\python\lib\site-packages\starlette\concurrency.py", line 41, in run_in_threadpool
    return await anyio.to_thread.run_sync(func, *args)
  File "C:\sd.webui\system\python\lib\site-packages\anyio\to_thread.py", line 33, in run_sync
    return await get_asynclib().run_sync_in_worker_thread(
  File "C:\sd.webui\system\python\lib\site-packages\anyio\_backends\_asyncio.py", line 877, in run_sync_in_worker_thread
    return await future
  File "C:\sd.webui\system\python\lib\site-packages\anyio\_backends\_asyncio.py", line 807, in run
    result = context.run(func, *args)
  File "C:\sd.webui\webui\modules\api\api.py", line 380, in text2imgapi
    processed = process_images(p)
  File "C:\sd.webui\webui\modules\processing.py", line 732, in process_images
    res = process_images_inner(p)
  File "C:\sd.webui\webui\extensions\sd-webui-controlnet\scripts\batch_hijack.py", line 42, in processing_process_images_hijack
    return getattr(processing, '__controlnet_original_process_images_inner')(p, *args, **kwargs)
  File "C:\sd.webui\webui\modules\processing.py", line 867, in process_images_inner
    samples_ddim = p.sample(conditioning=p.c, unconditional_conditioning=p.uc, seeds=p.seeds, subseeds=p.subseeds, subseed_strength=p.subseed_strength, prompts=p.prompts)
  File "C:\sd.webui\webui\extensions\sd-webui-controlnet\scripts\hook.py", line 451, in process_sample
    return process.sample_before_CN_hack(*args, **kwargs)
  File "C:\sd.webui\webui\modules\processing.py", line 1140, in sample
    samples = self.sampler.sample(self, x, conditioning, unconditional_conditioning, image_conditioning=self.txt2img_image_conditioning(x))
  File "C:\sd.webui\webui\modules\sd_samplers_kdiffusion.py", line 235, in sample
    samples = self.launch_sampling(steps, lambda: self.func(self.model_wrap_cfg, x, extra_args=self.sampler_extra_args, disable=False, callback=self.callback_state, **extra_params_kwargs))
  File "C:\sd.webui\webui\modules\sd_samplers_common.py", line 261, in launch_sampling
    return func()
  File "C:\sd.webui\webui\modules\sd_samplers_kdiffusion.py", line 235, in <lambda>
    samples = self.launch_sampling(steps, lambda: self.func(self.model_wrap_cfg, x, extra_args=self.sampler_extra_args, disable=False, callback=self.callback_state, **extra_params_kwargs))
  File "C:\sd.webui\system\python\lib\site-packages\torch\utils\_contextlib.py", line 115, in decorate_context
    return func(*args, **kwargs)
  File "C:\sd.webui\webui\repositories\k-diffusion\k_diffusion\sampling.py", line 145, in sample_euler_ancestral
    denoised = model(x, sigmas[i] * s_in, **extra_args)
  File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl
    return forward_call(*args, **kwargs)
  File "C:\sd.webui\webui\modules\sd_samplers_cfg_denoiser.py", line 169, in forward
    x_out = self.inner_model(x_in, sigma_in, cond=make_condition_dict(cond_in, image_cond_in))
  File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 1501, in _call_impl
    return forward_call(*args, **kwargs)
  File "C:\sd.webui\webui\repositories\k-diffusion\k_diffusion\external.py", line 112, in forward
    eps = self.get_eps(input * c_in, self.sigma_to_t(sigma), **kwargs)
  File "C:\sd.webui\webui\repositories\k-diffusion\k_diffusion\external.py", line 138, in get_eps
    return self.inner_model.apply_model(*args, **kwargs)
  File "C:\sd.webui\webui\modules\sd_models_xl.py", line 37, in apply_model
    return self.model(x, t, cond)
  File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 1527, in _call_impl
    result = hook(self, args)
  File "C:\sd.webui\webui\modules\lowvram.py", line 54, in send_me_to_gpu
    module.to(devices.device)
  File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 1145, in to
    return self._apply(convert)
  File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 797, in _apply
    module._apply(fn)
  File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 797, in _apply
    module._apply(fn)
  File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 797, in _apply
    module._apply(fn)
  [Previous line repeated 5 more times]
  File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 820, in _apply
    param_applied = fn(param)
  File "C:\sd.webui\system\python\lib\site-packages\torch\nn\modules\module.py", line 1143, in convert
    return t.to(device, dtype if t.is_floating_point() or t.is_complex() else None, non_blocking)
torch.cuda.OutOfMemoryError: CUDA out of memory. Tried to allocate 20.00 MiB (GPU 0; 4.00 GiB total capacity; 3.30 GiB already allocated; 0 bytes free; 3.47 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF
---

Is it because of my hardware?

32 GB RAM and NVIDIA RTX A1000 (I think 4 GB RAM)...

Thanks in advance.

Regards

2023-12-29 10:25 AM

Hi Ikabrams,

Thanks for the question.

You are facing a different issue. Please try some general suggestions for 'exit code 9009', such as this.
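As general background, exit code 9009 is what the Windows command interpreter returns when a command is not recognized, so one generic check is whether the programs a script calls can be found on the PATH. The sketch below shows that check in Python. Which commands the engine's launcher actually invokes is not stated in this thread, so the names you pass in are your own guesses, and the function name is hypothetical.

```python
import shutil


def missing_commands(names: list[str]) -> list[str]:
    """Return the entries of `names` that cannot be found on the PATH."""
    return [name for name in names if shutil.which(name) is None]


# Hypothetical usage: replace the list with the commands your launcher script calls.
print(missing_commands(["no-such-command-for-this-example"]))
```

An empty result means every listed command was found. It does not rule out other causes, such as a damaged or misplaced install folder.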

Are you sure that you uncompressed the folder to the root folder?

If you are still experiencing this problem, please contact your local support and we will investigate your case.

Have a happy New Year!

Kind regards,

Technical Support Engineer

2023-12-29 10:27 AM

Hi EM-A,

Thanks for the question.

Please note that the minimum system requirement to use the Add-on is: 8 GB of dedicated VRAM on Windows and 16 GB of RAM on Apple Silicon.

For details, see the system requirements.

Have a Happy New Year!

Kind regards,

Technical Support Engineer
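The CUDA error quoted earlier in the thread already states how much memory the card has, so the requirement can be checked directly from a log. The following is a rough sketch that pulls those numbers out of the message with a regular expression. The pattern is written against the exact wording in that log and may not match messages from other PyTorch versions. All names are illustrative.

```python
import re

# Matches e.g. "GPU 0; 4.00 GiB total capacity; 3.30 GiB already allocated"
OOM_PATTERN = re.compile(
    r"GPU (?P<gpu>\d+); (?P<total>[\d.]+) GiB total capacity; "
    r"(?P<allocated>[\d.]+) GiB already allocated"
)

MIN_VRAM_GIB = 8.0  # minimum quoted in this thread for Windows


def parse_cuda_oom(message: str):
    """Extract GPU index, total and allocated GiB from a CUDA OOM message, or None."""
    match = OOM_PATTERN.search(message)
    if match is None:
        return None
    return {
        "gpu": int(match["gpu"]),
        "total_gib": float(match["total"]),
        "allocated_gib": float(match["allocated"]),
    }


message = (
    "CUDA out of memory. Tried to allocate 20.00 MiB (GPU 0; 4.00 GiB total capacity; "
    "3.30 GiB already allocated; 0 bytes free; 3.47 GiB reserved in total by PyTorch)"
)
info = parse_cuda_oom(message)
print(info, "meets minimum:", info["total_gib"] >= MIN_VRAM_GIB)
```

For the message above, the parsed total is 4.0 GiB, which is below the stated 8 GB minimum.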

2024-01-01 02:55 AM - edited 2024-01-02 12:17 AM

Just posting this as I wait on the download...

Still working on a successful download of the dmg...I think I've tried at least four different times since the release...not auspicious for the actual install or even, dare I dream, use...

I do have an M2 Pro, but only 16 GB RAM...

Can anyone comment on how long this took them to just download?

[edit to add]

I babysat the download and didn't let the machine go to sleep & it finally saved. I think the download was timing out when the computer went to sleep.

Install seems to have been successful.

First image created!
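If the download really was timing out because the Mac went to sleep, as the edit above suggests, one way to avoid babysitting it is to run the download under macOS's `caffeinate`, which holds off idle sleep while the wrapped command runs. This is only a sketch. The URL is a placeholder rather than the real download link, and whether the server supports resuming a partial download (`curl -C -`) is an assumption.

```python
import subprocess


def build_download_command(url: str) -> list[str]:
    """Build a command that downloads `url` with curl while preventing idle sleep."""
    return [
        "caffeinate", "-i",  # keep the system from idle-sleeping while curl runs
        "curl",
        "-L",                # follow redirects
        "-C", "-",           # resume a partial download if the server allows it
        "-O",                # save under the remote file name
        url,
    ]


def download(url: str) -> None:
    """Run the download and raise CalledProcessError if curl fails."""
    subprocess.run(build_download_command(url), check=True)


# Placeholder URL for illustration only.
print(build_download_command("https://example.com/placeholder.dmg"))
```

`caffeinate -i` only prevents idle sleep. Closing the lid or putting the machine to sleep manually will still interrupt the transfer.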